We have probably all heard the term “big data,” another term that means many different things to many different people.

But what is BIG DATA, and what is its impact on the future of cost estimating?

Larry Dysert, a cost estimating thought leader, explains the concept of big data and how it will impact the future of cost estimating.

Watch the video below for more details.

Download the free audio mp3 podcast of this episode on iTunes

Note: This video is a sneak peek of the presentation “Emerging Trends and their Impact on the Future of Cost Estimating,” presented by Larry Dysert at the 2020 Project Control Summit.

To get access to the full presentation and other recordings of the Project Control Summit, please click here.

Video Transcript:

What is BIG DATA?

Here is a general definition of Big Data:

“Big data sets are simply vast amounts of data that are too big for humans to deal with without the help of specialized machines or tools.” (Project Controls and Data Analytics in the Era of Industry 4.0; Arrow, Markhus, Singh, Ramos, Bakker; AACE Source, April 2019)

The term Big Data was introduced by Roger Mougalas back in 2005. However, people have been applying big data and trying to understand the data available to them for a long time.

Capturing Big Data in the Past

We’ve always been able to capture data.

When I first started working for an EPC firm back in the 1980s, it had a vast library. Almost half of one floor of the building was dedicated to the library, which collected project closeout reports.

At that time, each project closeout report filled four or five wide binders. They held mostly handwritten reports, perhaps some typed reports, and usually some very poor-quality copies of the project’s supporting documents.

It was difficult and time-consuming for me as an estimator to find the data I was looking for. Analyzing the data was just as challenging, because I had to perform the calculations manually on a ten-key calculator.

But things have changed since then!

Capturing Big Data Today

Just a few years ago, we may have thought we were all-stars because we could create pivot tables and run regression analyses in spreadsheets.

Well, now we’re on the verge of using advanced technologies such as artificial intelligence and machine learning to sort through and analyze big data.

Big Data Technologies

Artificial Intelligence and Machine Learning

Using artificial intelligence and machine learning capabilities, you can quickly sort through years of digital project records. You can find the specific project data that match your new project’s characteristics, and automatically normalize the data for time, location, and currency by accessing historical and current economic indices.
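To make the normalization step concrete, here is a minimal sketch of restating one historical cost for currency, time, and location. It assumes a hypothetical record layout and an exchange rate taken at the record date. It also assumes a cost index expressed in the base currency and simple ratio-based location factors. In practice, the indices and factors would come from your own sources.

```python
from dataclasses import dataclass


@dataclass
class HistoricalCost:
    """Hypothetical record layout for one historical cost item."""
    cost: float             # recorded cost, in the project's own currency
    fx_to_base: float       # exchange rate to the base currency at the record date
    cost_index: float       # escalation index (base-currency terms) at the record date
    location_factor: float  # location factor of the historical site


def normalize(record: HistoricalCost, target_index: float, target_location: float) -> float:
    """Restate a historical cost in base currency at the target date and location."""
    base_currency = record.cost * record.fx_to_base                  # currency adjustment
    escalated = base_currency * (target_index / record.cost_index)   # time adjustment
    return escalated * (target_location / record.location_factor)    # location adjustment


# Example: a 1,000,000 cost recorded abroad, restated for a new site and date.
old = HistoricalCost(cost=1_000_000, fx_to_base=1.10, cost_index=200.0, location_factor=1.05)
print(round(normalize(old, target_index=250.0, target_location=0.95)))
```

The order of adjustments is a design choice. Converting currency first lets a single base-currency index handle escalation.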

So organizations need to prepare to collect big data and to bring in the big data technologies and technologists needed to use it.

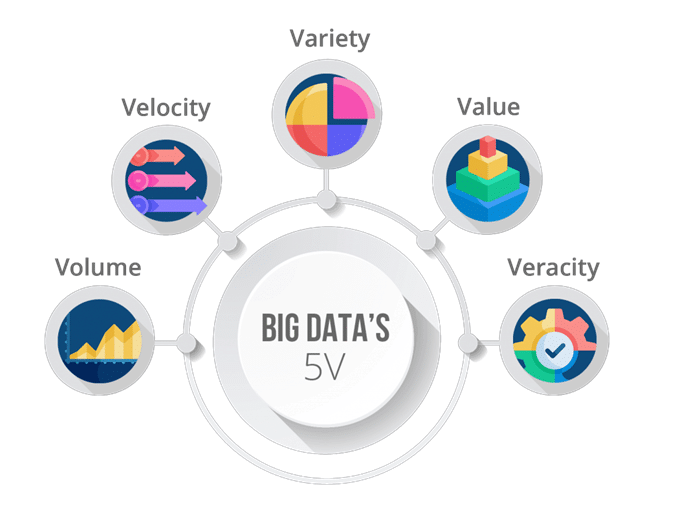

The 5V’s of Big Data

To understand big data, let’s explore its 5V’s: Volume, Velocity, Variety, Veracity, and Value.

Volume of Data:

Volume refers to the amount of data to be collected and stored, and to the question of where to store it.

Data storage capacity has grown exponentially and is still growing, while getting less expensive all the time. So we’re all going to get used to terms such as terabytes, petabytes, exabytes, and zettabytes.

The bottom line is that you have the ability to store vast amounts of your historical project records inexpensively.

Therefore, you need to start collecting project data now. The analysis can come in the future. For now, let’s collect the data so that we have it available.

Variety of Data:

No longer constrained by the volume of data, you can start collecting both structured data and unstructured data.

Variety refers to the nature of the data: structured, semi-structured, or unstructured.

Unstructured data can be analyzed by our AI and machine learning systems, which can organize it as needed in the future.

Greater computing power is going to make sense of all this unstructured data. You can start collecting not only paper documents and computer records but also video recordings of meetings, audio meeting minutes, and so on. All of this data will eventually be handled by these new technologies.

Velocity of Data:

You also need to consider data velocity, the speed at which data is created and captured.

Nowadays, you can collect a lot of data in real time instead of only at set intervals, as was common in the past.

So now I can track, minute by minute, the actual hours that construction equipment is being used on a current project. I can then use that information to support the estimate of construction equipment costs, which are part of the overall construction indirect costs, for a new project.
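The sketch below illustrates one way to use minute-by-minute equipment data. It converts one-minute telemetry samples into hours of use and a utilization fraction, then applies that fraction to a new project’s planned on-site equipment hours. The boolean sample format and the function names are hypothetical. Scaling planned hours by observed utilization is likewise an assumption for illustration, not a prescribed method.

```python
def utilization(samples: list[bool]) -> tuple[float, float]:
    """Return (hours in use, utilization fraction) from one-minute samples.

    Each sample is True if the equipment was working during that minute
    (hypothetical telemetry format).
    """
    if not samples:
        return 0.0, 0.0
    working_minutes = sum(samples)  # True counts as 1
    return working_minutes / 60, working_minutes / len(samples)


def expected_working_hours(planned_on_site_hours: float, observed_utilization: float) -> float:
    """Scale planned on-site hours by the utilization observed on a similar project."""
    return planned_on_site_hours * observed_utilization


# One 10-hour shift: 450 working minutes followed by 150 idle minutes.
shift = [True] * 450 + [False] * 150
hours, fraction = utilization(shift)
print(hours, fraction)  # 7.5 0.75
print(expected_working_hours(2000.0, fraction))  # 1500.0
```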

Veracity of Data:

Veracity refers to inconsistencies and uncertainty in data. Available data can sometimes get messy, and quality and accuracy are difficult to control.

However, I’m going to rely on artificial intelligence and machine learning algorithms that can sort through this data and identify where I might have gaps or errors in it.
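The AI and machine learning screening described here is beyond a short example, but the kind of check it performs can be sketched with simple rules. The function below flags missing values and statistical outliers in one numeric field. It detects outliers with the modified z-score, which is based on the median absolute deviation. This is a rule-based stand-in, not machine learning. The record layout is assumed. The 3.5 cutoff is also an assumption, though it is a commonly used threshold for this score.

```python
import statistics


def find_quality_issues(records: list[dict], field: str, z_limit: float = 3.5) -> dict:
    """Flag missing values and outliers in one numeric field of project records."""
    missing = [i for i, r in enumerate(records) if r.get(field) is None]
    present = [(i, r[field]) for i, r in enumerate(records) if r.get(field) is not None]

    outliers = []
    if len(present) >= 3:
        values = [v for _, v in present]
        med = statistics.median(values)
        mad = statistics.median([abs(v - med) for v in values])  # median absolute deviation
        if mad > 0:
            # Modified z-score: 0.6745 * |x - median| / MAD
            outliers = [i for i, v in present if 0.6745 * abs(v - med) / mad > z_limit]
    return {"missing": missing, "outliers": outliers}


# Hypothetical labor hours per meter of pipe from six historical records.
records = [{"hrs_per_m": 1.0}, {"hrs_per_m": 1.1}, {"hrs_per_m": None},
           {"hrs_per_m": 0.9}, {"hrs_per_m": 1.05}, {"hrs_per_m": 9.0}]
print(find_quality_issues(records, "hrs_per_m"))  # {'missing': [2], 'outliers': [5]}
```

The median and MAD are used instead of the mean and standard deviation because a single bad record distorts them far less.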

Value of Data:

Data in itself has no use or importance. It needs to be converted into information before it becomes valuable.

In the context of big data, value is the degree to which the data can positively impact a company’s business. This is where big data analytics comes into the picture.

With the help of advanced data analytics, artificial intelligence, and machine learning concepts, useful insights can be derived from the collected data. These insights, in turn, are what add value to the decision-making process.

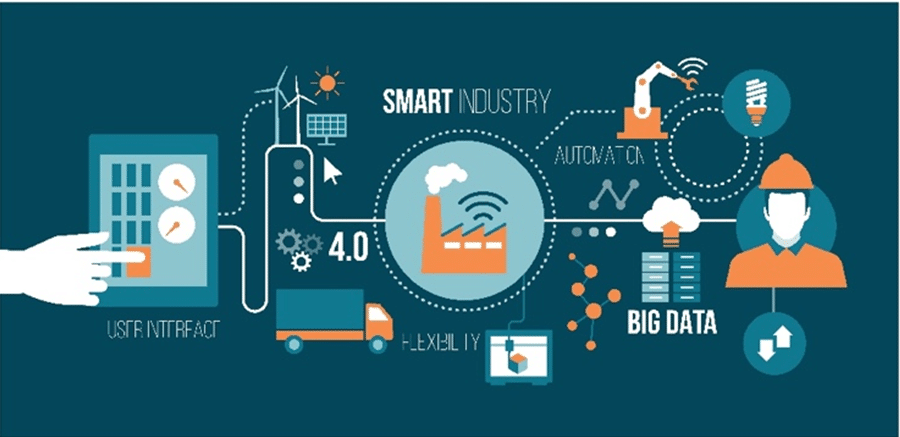

The Internet of Things (IoT)

Another new technology that is related to Big Data is the Internet of Things (IoT).

The Internet of Things (IoT) refers to connected devices and machines that transfer data over a network without requiring human-to-human or human-to-computer interaction.

IoT is what makes your smart home work. It turns on the lights when you enter a room. It may even start your car automatically as you approach the door.

IoT is supported by technologies such as Near-Field Communication (NFC) devices, Radio-Frequency Identification (RFID), and other sensors.

These technologies are going to let you determine where the construction equipment is used on your project and how often it is used. A near-field communication device attached to the equipment communicates back to a database. It can track when the equipment is in use, how long it sits idle, where it is used, and so on. All of that information may help me, as an estimator, prepare my estimates.
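As a rough illustration of how such device data might be reduced to idle time, the sketch below sums the hours a machine spends in each state. It works from a time-ordered log of state changes. The (timestamp, state) event format and the state names are hypothetical, because a real NFC or RFID system would define its own schema. Location could be grouped in the same way.

```python
from datetime import datetime


def hours_by_state(events: list[tuple[datetime, str]], end: datetime) -> dict[str, float]:
    """Total hours in each state from time-ordered (timestamp, state) events.

    Each event marks the moment the equipment entered a state; the state
    lasts until the next event, or until `end` for the last one.
    """
    totals: dict[str, float] = {}
    boundaries = [t for t, _ in events[1:]] + [end]
    for (start, state), stop in zip(events, boundaries):
        totals[state] = totals.get(state, 0.0) + (stop - start).total_seconds() / 3600
    return totals


day = datetime(2024, 5, 1)
events = [
    (day.replace(hour=7), "working"),
    (day.replace(hour=9, minute=30), "idle"),
    (day.replace(hour=10), "working"),
    (day.replace(hour=12), "off"),
]
print(hours_by_state(events, end=day.replace(hour=13)))
# {'working': 4.5, 'idle': 0.5, 'off': 1.0}
```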

It’s not unrealistic to expect that, eventually, every major component installed in a facility will have a radio frequency identification device attached to it. That device will identify when the component was purchased, when it was installed, and when it was last maintained.

Every worker on the construction site may carry a badge or ID that also contains an RFID device. The device would report on the worker’s daily activities, the tools being used, and the materials being requested.

We’re going to have access to all of this real-time data from similar projects. We as estimators can then use it to determine the best hours to carry in our estimate to install a lineal meter of pipe or a cubic meter of concrete foundation. I’ll also better understand what the true productivity adjustment was for piping installed on the fifth level of a structure, compared with the ground level.
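The sketch below illustrates this kind of productivity adjustment. It compares labor hours per meter of installed pipe at each structure level with the rate at the ground level. The record format and figures are hypothetical. A ratio of aggregated rates is only one way to express the adjustment, and it ignores other drivers such as congestion or pipe size.

```python
def productivity_factors(records: list[tuple[int, float, float]],
                         baseline_level: int = 0) -> dict[int, float]:
    """Productivity factor per structure level relative to a baseline level.

    records: (level, labor hours, meters of pipe installed) per work package.
    A factor of 1.2 means 20% more hours per meter than at the baseline level.
    """
    hours: dict[int, float] = {}
    meters: dict[int, float] = {}
    for level, h, m in records:
        hours[level] = hours.get(level, 0.0) + h
        meters[level] = meters.get(level, 0.0) + m
    base_rate = hours[baseline_level] / meters[baseline_level]
    return {lvl: (hours[lvl] / meters[lvl]) / base_rate for lvl in sorted(hours)}


# Hypothetical work packages: two at ground level (0), one on the fifth level (5).
packages = [(0, 100.0, 200.0), (0, 60.0, 120.0), (5, 90.0, 150.0)]
print(productivity_factors(packages))
```

Summing hours and meters before dividing weights each level by the quantity installed, so a small work package does not skew the rate.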

We’re going to be in a position to vastly improve our ability to prepare, cost, price, and validate estimates.

The Impact of Big Data on Cost Estimating

In this data-driven future, we’re going to be able to collect much more data and information in a digital environment than we ever could on paper.

What do you do with the data?

Well, you’re going to be able to turn all the data you collect into value through data analysis.

Artificial Intelligence and Advanced Analytics

The amount of data will be overwhelming. It’s going to be Data Mining, Artificial Intelligence, Data Analytics, and the development of predictive cost models that will turn the collected data into useful information, and as a result, improve our estimating capabilities and support better decision-making.

Artificial intelligence and machine learning capabilities will find the data, sort through it, normalize it, see if it meets quality expectations, and provide the analytics to get us the answers that we as the estimators are looking for.

For example, I’ll be able to access every purchase order for every project across the organization, on any continent, to develop a model for estimating the cost of a heat exchanger. Another example would be quickly checking the online catalogs of 50 different vendors to obtain a current price for a 6-inch gate valve.
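A cost model built from purchase orders could take many forms. One common choice in cost engineering is a capacity-exponent (power-law) relationship, cost = a * size^b, fitted here by least squares on the logarithms of size and cost. The sketch assumes the purchase orders have already been normalized for time, location, and currency. It also assumes they have been reduced to (heat transfer area, cost) pairs. The model choice and the data are illustrative assumptions.

```python
import math


def fit_power_law(sizes: list[float], costs: list[float]) -> tuple[float, float]:
    """Fit cost = a * size**b by ordinary least squares on log(cost) vs. log(size)."""
    if len(sizes) != len(costs) or len(sizes) < 2:
        raise ValueError("need at least two matching (size, cost) pairs")
    xs = [math.log(s) for s in sizes]
    ys = [math.log(c) for c in costs]
    n = len(xs)
    x_bar, y_bar = sum(xs) / n, sum(ys) / n
    sxx = sum((x - x_bar) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("sizes must not all be equal")
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    b = sxy / sxx                    # capacity exponent
    a = math.exp(y_bar - b * x_bar)  # implied cost of a unit-size item
    return a, b


# Hypothetical normalized purchase orders: heat transfer area (m2) and cost.
areas = [20.0, 50.0, 120.0, 300.0]
costs = [41_000.0, 72_000.0, 123_000.0, 210_000.0]
a, b = fit_power_law(areas, costs)
print(f"b = {b:.2f}, predicted cost at 80 m2: {a * 80.0 ** b:,.0f}")
```

Fitting in log space turns the power law into a straight line, so the exponent b is simply the regression slope.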

I’ll be able to access government and other economic indices to generate the best escalation forecast for every commodity category in my estimate. Those forecasts would be based on macroeconomic models of raw material pricing, expected labor conditions, and other market conditions.
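Once escalation rates have been forecast for each commodity category, applying them is simple compounding. The sketch below assumes hypothetical annual rates per category and a whole number of years from the estimate base date. A fuller treatment would often escalate each category to the midpoint of its spending rather than over whole years.

```python
def escalate(base_costs: dict[str, float],
             annual_rates: dict[str, list[float]], years: int) -> dict[str, float]:
    """Compound forecast annual escalation rates for each commodity category."""
    escalated = {}
    for category, cost in base_costs.items():
        rates = annual_rates[category]
        if len(rates) < years:
            raise ValueError(f"only {len(rates)} years of rates for {category!r}")
        factor = 1.0
        for rate in rates[:years]:
            factor *= 1.0 + rate
        escalated[category] = cost * factor
    return escalated


# Hypothetical forecast rates for two commodity categories over two years.
base = {"piping": 1_000_000.0, "concrete": 500_000.0}
rates = {"piping": [0.05, 0.04], "concrete": [0.03, 0.03]}
print({k: round(v) for k, v in escalate(base, rates, years=2).items()})
# {'piping': 1092000, 'concrete': 530450}
```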

So the artificial intelligence engine is going to be able to research detailed project records to determine more accurately the adjustments to labor productivity for height, congestion, distance of the work from the laydown areas, and a myriad of other considerations.

We’re going to have the data available. We need to be able to describe what we want out of the data. Then our computing power is going to be able to find, sort, and present that information back to us.

Conclusion

Fuzzy logic, artificial neural networks, regression analysis, case-based reasoning, random forests, evolutionary computing, and machine learning are the technologies that will enable the advanced analytics we can apply in our estimating processes.

However, we don’t have to be experts in any of those technologies. We’re going to rely on the information management specialists to implement those solutions for us.

What we do need to understand as estimators is what data is available and which specific data to combine to provide the information and metrics we are interested in. The technology will then work for us to get the answers we’re looking for.

We’re going to be in a much better position to compare similar current and historical projects based on cost, schedule, and other performance metrics to support estimate validation. We’ll also have much better access to normalized historical data.
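Finding “similar” projects usually means defining a distance over project characteristics. As a minimal sketch of the case-based reasoning idea mentioned above, the function below ranks historical projects by Euclidean distance. It first scales each attribute by that attribute’s range across the history. The attribute names and values are hypothetical. Weighting all attributes equally is an assumption that a real model would revisit.

```python
import math


def most_similar(new: dict, history: list[dict], keys: list[str], k: int = 3) -> list[dict]:
    """Return the k historical projects closest to `new` on the given attributes."""
    # Range of each attribute across history, so no single unit dominates.
    spans = {}
    for key in keys:
        values = [p[key] for p in history]
        spans[key] = (max(values) - min(values)) or 1.0

    def distance(project: dict) -> float:
        return math.sqrt(sum(((project[key] - new[key]) / spans[key]) ** 2 for key in keys))

    return sorted(history, key=distance)[:k]


history = [
    {"name": "A", "capacity_tpd": 100, "duration_months": 24},
    {"name": "B", "capacity_tpd": 400, "duration_months": 36},
    {"name": "C", "capacity_tpd": 130, "duration_months": 27},
]
new_project = {"capacity_tpd": 110, "duration_months": 25}
print([p["name"] for p in most_similar(new_project, history, ["capacity_tpd", "duration_months"], k=2)])
# ['A', 'C']
```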

These technologies are not going to replace us as estimators. They’re going to augment our capabilities, so we can spend more time on analysis rather than on the tedious work of scope definition, costing, and pricing.

Therefore, advanced analytics will improve estimate information and validation, which in turn gives better support to the decisions that rely on our cost estimates.

We will be able to enhance cost estimates through these types of technologies and these improved predictive metrics.

About the Author, Larry Dysert

Larry R. Dysert is a sought-after speaker and author on cost estimating and cost engineering topics. He brings over 30 years of professional experience in cost estimating, management, project consulting, and training across a wide variety of industries. He currently serves as the managing partner of Conquest Consulting Group, providing consulting services to process industry owners on recommended best practices for estimating, project controls, and project benchmarking. Larry has been responsible for preparing conceptual and detailed estimates for domestic and international capital projects ranging in size to over $25 billion.

Active in AACE International, Larry is currently serving as the VP of AACE’s Technical Board. Larry is also a Fellow of AACE International, a recipient of AACE’s Total Cost Management Award and AACE’s Award of Merit, and an Honorary Lifetime Member. Larry chaired the task force that developed AACE’s Certified Estimating Professional Program.

Larry has specialized in estimates for projects of various sizes and strategic importance, projects that use new technologies, and estimates that use parametric estimating methodologies. Larry has presented training seminars in estimating, cost metrics analysis, risk analysis, and Total Cost Management to process industry companies around the world.

Connect with Larry via LinkedIn or his website.
